Introduction

Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications. It was started by Google in 2014 and is now one of the most popular open-source projects.

Today, more and more applications are deployed in Docker containers and, as projects grow, a good orchestration tool becomes a necessity. Kubernetes is mature enough to be used in production. In fact, there are already a lot of user case studies, which I encourage you to read.

I just recently started to play with Kubernetes, and so far I’ve only scratched the surface, but I wanted to share what I did to make it run locally.

A few things about the setup used here:

- Everything runs on Vagrant (using VirtualBox as a provider)

- 4 Kubernetes instances in total (1 master and 3 nodes)

- All machines run on Ubuntu 16.04

- Kubernetes is configured via Kubeadm

- Ansible is used to provision the machines

All the code and config for this tutorial are located in this GitHub repository.

Let’s get started.

Installing Ansible

First we need to create a Python 2 virtual environment in which we’re going to install Ansible.

virtualenv venv

source venv/bin/activate

pip install ansible

Configuring Vagrant

To continue, we need to install Vagrant and VirtualBox. Then we’re going to use a Vagrantfile that defines the machines. Here’s what it looks like:

    nodes = [
      { :hostname => 'kubernetes-master', :ip => '172.16.66.2', :ram => 4096 },
      { :hostname => 'kubernetes-node1', :ip => '172.16.66.3', :ram => 2048 },
      { :hostname => 'kubernetes-node2', :ip => '172.16.66.4', :ram => 2048 },
      { :hostname => 'kubernetes-node3', :ip => '172.16.66.5', :ram => 2048 }
    ]

    Vagrant.configure("2") do |config|
      nodes.each do |node|
        config.vm.define node[:hostname] do |nodeconfig|
          nodeconfig.vm.box = "bento/ubuntu-16.04"
          nodeconfig.vm.hostname = node[:hostname] + ".box"
          nodeconfig.vm.network :private_network, ip: node[:ip]

          memory = node[:ram] || 256

          nodeconfig.vm.provider :virtualbox do |vb|
            vb.customize [
              "modifyvm", :id,
              "--memory", memory.to_s,
              "--cpus", "4"
            ]
          end
        end
      end
    end

You can see here the 4 machines. Feel free to tune the RAM.
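Before tuning the RAM, it can help to add up how much memory the four VMs will claim from the host. Here is a minimal Python sketch that mirrors the node list from the Vagrantfile. The `total_ram_mb` helper is hypothetical and exists only for illustration; it is not part of the repository.

```python
# Node definitions copied from the Vagrantfile (RAM in MB).
NODES = [
    {"hostname": "kubernetes-master", "ip": "172.16.66.2", "ram": 4096},
    {"hostname": "kubernetes-node1", "ip": "172.16.66.3", "ram": 2048},
    {"hostname": "kubernetes-node2", "ip": "172.16.66.4", "ram": 2048},
    {"hostname": "kubernetes-node3", "ip": "172.16.66.5", "ram": 2048},
]

DEFAULT_RAM_MB = 256  # same fallback the Vagrantfile uses when :ram is missing


def total_ram_mb(nodes):
    """Sum the memory (in MB) that all VMs will request from the host."""
    return sum(node.get("ram") or DEFAULT_RAM_MB for node in nodes)


if __name__ == "__main__":
    total = total_ram_mb(NODES)
    print(f"{len(NODES)} VMs need {total} MB of host RAM")
```

With the values above, the total is 10240 MB, so lowering the per-node RAM is worth considering on a machine with 16 GB or less.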

Let’s start the VMs : vagrant up.

Installing Kubernetes

Now that our machines are running, it’s time to provision them. And since everything is better with some automation, we will use Ansible!

Again, please refer to the source code provided here.

As you can see, the Ansible setup here is pretty basic. We first have an inventory file containing information about our nodes :

[all-nodes]

172.16.66.2 ansible_user=vagrant ansible_ssh_pass=vagrant

172.16.66.3 ansible_user=vagrant ansible_ssh_pass=vagrant

172.16.66.4 ansible_user=vagrant ansible_ssh_pass=vagrant

172.16.66.5 ansible_user=vagrant ansible_ssh_pass=vagrant

[kube-master]

172.16.66.2 ansible_user=vagrant ansible_ssh_pass=vagrant

[kube-nodes]

172.16.66.3 ansible_user=vagrant ansible_ssh_pass=vagrant

172.16.66.4 ansible_user=vagrant ansible_ssh_pass=vagrant

172.16.66.5 ansible_user=vagrant ansible_ssh_pass=vagrant
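The inventory repeats the same connection variables on every line, so it is easy to make a mistake when adding a node. As an illustration only, here is a small Python sketch that renders the same inventory text from a single mapping of groups to hosts. The `render_inventory` function and the `GROUPS` mapping are hypothetical names; the repository ships a hand-written inventory file.

```python
# Hosts per inventory group, mirroring the inventory file above.
GROUPS = {
    "kube-master": ["172.16.66.2"],
    "kube-nodes": ["172.16.66.3", "172.16.66.4", "172.16.66.5"],
}

# Connection variables shared by every Vagrant box in this setup.
HOST_VARS = "ansible_user=vagrant ansible_ssh_pass=vagrant"


def render_inventory(groups, host_vars=HOST_VARS):
    """Return INI-style inventory text, with an all-nodes group listed first."""
    all_hosts = [host for hosts in groups.values() for host in hosts]
    sections = {"all-nodes": all_hosts, **groups}
    lines = []
    for name, hosts in sections.items():
        lines.append(f"[{name}]")
        lines.extend(f"{host} {host_vars}" for host in hosts)
        lines.append("")  # blank line between groups
    return "\n".join(lines).rstrip() + "\n"


if __name__ == "__main__":
    print(render_inventory(GROUPS), end="")
```

Adding a fourth worker then means adding one IP to `kube-nodes`; the `all-nodes` group follows automatically.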

We also have a vars.yml file, containing a few variables that will be used during the playbook execution.

kubernetes_master_ip: "172.16.66.2"

kubeadm_token: "jfuw63.1osue85lsh973hbf"
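The token above is hard-coded, which is fine for a local playground, but you may prefer to generate your own. The sketch below assumes the kubeadm token format of six characters, a dot, then sixteen characters, all lowercase letters or digits, which is the shape of the token in vars.yml. The `generate_kubeadm_token` function is a hypothetical helper, not something kubeadm or the repository provides.

```python
import re
import secrets
import string

# Assumed token shape: "<6 chars>.<16 chars>", lowercase letters and digits only.
TOKEN_ALPHABET = string.ascii_lowercase + string.digits
TOKEN_PATTERN = re.compile(r"^[a-z0-9]{6}\.[a-z0-9]{16}$")


def generate_kubeadm_token():
    """Build a random token in the same shape as the one in vars.yml."""
    token_id = "".join(secrets.choice(TOKEN_ALPHABET) for _ in range(6))
    token_secret = "".join(secrets.choice(TOKEN_ALPHABET) for _ in range(16))
    return f"{token_id}.{token_secret}"


if __name__ == "__main__":
    token = generate_kubeadm_token()
    # Print a line that can be pasted into vars.yml.
    print(f'kubeadm_token: "{token}"')
```

The `secrets` module is used rather than `random` because the token acts as a shared secret between the master and the nodes.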

There are 3 steps for setting up Kubernetes here. We need to :

- install Kubernetes and related packages on every node.

- configure the master node.

- configure the other nodes.

The playbook.yml file describes these 3 parts :

    - hosts: all-nodes
      roles:
        - kubernetes-common

    - hosts: kube-master
      roles:
        - kubernetes-master

    - hosts: kube-nodes
      roles:
        - kubernetes-nodes

The roles used by Ansible are located in the roles/ directory. Here’s a summary of what they do.

1. kubernetes-common

This role first installs everything each node needs to run Kubernetes components. That is :

- docker.io

- kubelet

- kubeadm

- kubectl

- kubernetes-cni

This is meant to work for Ubuntu 16.04. It hasn’t been tested on any other platform.

On each of the Kubernetes nodes, there’s an agent called kubelet. This agent makes sure all the containers managed by Kubernetes are running and healthy. This role also configures kubelet so that the node can communicate with the cluster.

It overrides the kubelet service definition to add --hostname-override=X.X.X.X to the command that starts the service, where X.X.X.X is the node’s IP address.

We do that because by default kubelet chooses the IP of the first network interface available, which is the NAT interface in the case of Vagrant boxes. This is a problem because all nodes have the same IP on the NAT interface. So we manually change that to match the IP we configured for each node in the Vagrantfile.

We’re gonna use the KUBELET_EXTRA_ARGS environment variable for this. Here’s what the service definition looks like after modification:

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--kubeconfig=/etc/kubernetes/kubelet.conf --require-kubeconfig=true"

Environment="KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true"

Environment="KUBELET_NETWORK_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"

Environment="KUBELET_DNS_ARGS=--cluster-dns=10.96.0.10 --cluster-domain=cluster.local"

# Additional code to fix the IP issue

Environment="KUBELET_EXTRA_ARGS=--hostname-override=X.X.X.X"

# End of additional code

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_SYSTEM_PODS_ARGS $KUBELET_NETWORK_ARGS $KUBELET_DNS_ARGS $KUBELET_EXTRA_ARGS

Then, the role restarts the kubelet service.
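To make the IP selection concrete, here is a rough Python sketch of the idea: out of all the addresses a box has, keep the one that belongs to the private network from the Vagrantfile, then render the extra Environment line. It assumes the private network is 172.16.66.0/24 and uses 10.0.2.15 as an example NAT address (the usual VirtualBox default). Both function names are hypothetical; the actual role does this through Ansible.

```python
import ipaddress

# Private network declared in the Vagrantfile (assumed here to be a /24).
PRIVATE_NETWORK = ipaddress.ip_network("172.16.66.0/24")


def pick_node_ip(interface_ips, network=PRIVATE_NETWORK):
    """Return the first address that belongs to the private network.

    Raises ValueError if none of the addresses is in that network.
    """
    for ip in interface_ips:
        if ipaddress.ip_address(ip) in network:
            return ip
    raise ValueError(f"no address in {network} among {interface_ips}")


def kubelet_extra_args_line(node_ip):
    """Render the Environment line added to the kubelet service definition."""
    return f'Environment="KUBELET_EXTRA_ARGS=--hostname-override={node_ip}"'


if __name__ == "__main__":
    # Example addresses: the NAT address is identical on every box,
    # so it cannot be used to tell nodes apart.
    ips = ["10.0.2.15", "172.16.66.3"]
    print(kubelet_extra_args_line(pick_node_ip(ips)))
```

Filtering by network rather than by interface order is the point: the NAT interface comes first on these boxes, which is exactly why kubelet’s default choice is wrong here.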

2. kubernetes-master

This role configures the master node using kubeadm. It starts by running kubeadm init, which sets up and starts almost everything the master node needs (etcd, kube-apiserver, kube-controller-manager, kube-dns, kube-proxy, …). You can see we add a token to that command line. The other nodes will use this token when they join the cluster later in the playbook.

There is currently an open issue (as discussed on GitHub) about kube-proxy not being configured properly when started by kubeadm (on Vagrant specifically), which causes network issues.

The workaround for this is to change the configuration of kube-proxy to add --proxy-mode=userspace when the container starts. This is included in the role.

Finally, the role installs the network solution that will be used by Kubernetes. For more information about networking and Kubernetes, you can read this page.

In this case we chose Weave, because it is simple to set up and it runs as a CNI plug-in (kubeadm only supports CNI-based networks). But there are other interesting solutions, like Calico or Flannel.

3. kubernetes-nodes

This role just makes each node join the cluster by running kubeadm join.

Now that we’ve explored all parts of the playbook, let’s run it :

ansible-playbook playbook.yml -i inventory -e @vars.yml

Everything should be working correctly now. We can check that by connecting to the master node and running a few commands:

    vagrant ssh kubernetes-master

    $ sudo kubectl get nodes
    NAME          STATUS         AGE
    172.16.66.3   Ready          2m
    172.16.66.4   Ready          2m
    172.16.66.5   Ready          2m
    172.16.66.2   Ready,master   3m

    $ sudo kubectl get pods --namespace=kube-system
    NAME                                  READY   STATUS    RESTARTS   AGE
    dummy-2088944543-55b22                1/1     Running   0          4m
    etcd-172.16.66.2                      1/1     Running   0          3m
    kube-apiserver-172.16.66.2            1/1     Running   0          4m
    kube-controller-manager-172.16.66.2   1/1     Running   0          4m
    kube-discovery-1769846148-310vf       1/1     Running   0          4m
    kube-dns-2924299975-b9xp2             4/4     Running   0          4m
    kube-proxy-4kqdg                      1/1     Running   0          3m
    kube-proxy-g4pf7                      1/1     Running   0          4m
    kube-proxy-h1sg5                      1/1     Running   0          3m
    kube-proxy-w5smp                      1/1     Running   0          3m
    kube-scheduler-172.16.66.2            1/1     Running   0          3m
    weave-net-2hv7w                       2/2     Running   0          3m
    weave-net-f65cq                       2/2     Running   0          3m
    weave-net-jsbq2                       2/2     Running   0          3m
    weave-net-s4sgd                       2/2     Running   0          4m
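If you rebuild the cluster often, eyeballing this output gets tedious. Here is a small Python sketch that parses the text printed by kubectl get nodes and lists any node that is not Ready. It assumes the three-column layout shown above (NAME, STATUS, AGE), which may differ in other Kubernetes versions, and `not_ready_nodes` is a hypothetical helper name.

```python
# Sample text copied from the "kubectl get nodes" output above.
SAMPLE_OUTPUT = """\
NAME          STATUS         AGE
172.16.66.3   Ready          2m
172.16.66.4   Ready          2m
172.16.66.5   Ready          2m
172.16.66.2   Ready,master   3m
"""


def not_ready_nodes(kubectl_output):
    """Return names of nodes whose STATUS column does not include 'Ready'."""
    # Skip the header line and ignore blank lines.
    rows = [line.split() for line in kubectl_output.splitlines()[1:] if line.strip()]
    # STATUS can hold several comma-separated values, e.g. "Ready,master".
    return [row[0] for row in rows if "Ready" not in row[1].split(",")]


if __name__ == "__main__":
    missing = not_ready_nodes(SAMPLE_OUTPUT)
    print("all nodes ready" if not missing else f"not ready: {missing}")
```

Splitting the status on commas matters: a plain substring test would wrongly treat "NotReady" as ready.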

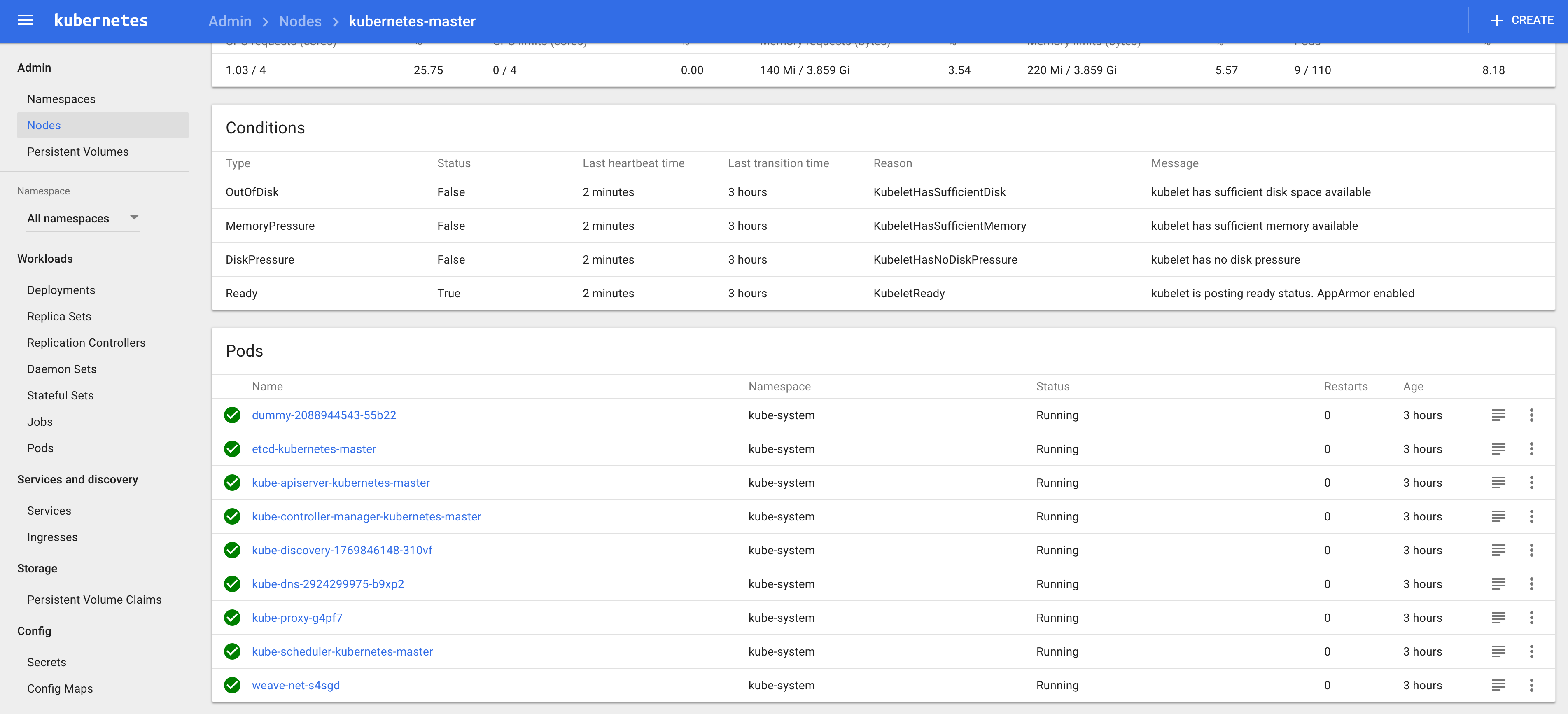

You can even install a UI Dashboard for Kubernetes, by running the following on the master node :

$ sudo kubectl create -f https://rawgit.com/kubernetes/dashboard/master/src/deploy/kubernetes-dashboard.yaml

Here’s what it looks like.

Conclusion

With this, we now have a functioning Kubernetes cluster running on Vagrant. Kubeadm simplifies the installation of Kubernetes a lot, and I really appreciate this tool. With just a few automated steps, it’s easy to have a cluster running locally within minutes.